1. Introduction

Nonparametric statistics is a field that has been rapidly developing over the last decade. Its development has been aided by the various benefits it has relative to classical statistical techniques:

- We can relax assumptions on the probability distribution of the data, most notably normality.

- There are cases in which classical procedures are neither applicable nor interpretable but a nonparametric one is.

- Modern computing has empowered many of these computationally expensive techniques.

So far, when approaching statistics problems, we’ve assumed the distribution of data. However, we rarely ever understand the data enough to confidently assume a distribution. We will cover Kernel Density Estimation (KDE), a non-parametric estimation technique for any distribution f(x). We will start with an explanation and mathematical form of a kernel density estimator, go over its properties, and touch on the burgeoning field of bandwidth selection.

2. History

The concept of KDE was introduced independently by Rosenblatt (1956) and Parzen (1962), so it is also called the Parzen-Rosenblatt window method in other fields such as signal processing and econometrics.

3. Some Intuition

The Histogram

Non-parametric density estimation may sound very alien, but in fact it’s so commonplace that we’ve already seen it countless times! In high school, and even earlier, we’ve come across the histogram. It turns out that histograms are non-parametric density estimators.

We split our data into equally sized bins/intervals $B_1, \dots, B_m$ and estimate the density in bin $i$ from the proportion of observations that fall within $B_i$. Let $n_i$ be the number of observations within interval $B_i$ and $m$ be the number of bins, for a histogram of a sample of size $n$ from distribution $f$:

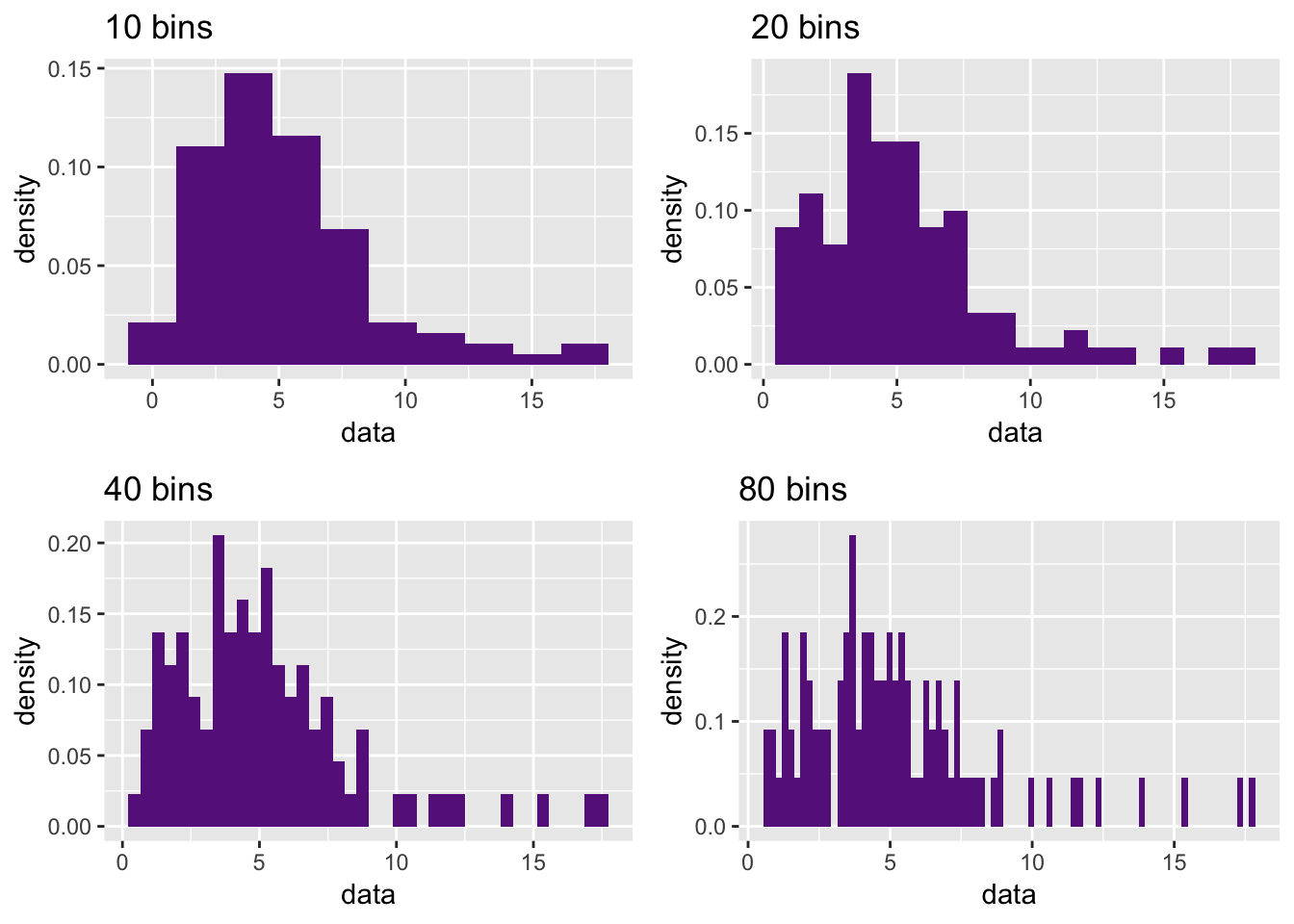

However, there are problems with this. First, histograms tend to be blocky and sensitive to the choice of bins.


Also, histograms are inherently local: an observation that lies just outside a bin's boundary is not counted in interval $B_i$, no matter how close it is to the points inside.
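To make the bin sensitivity concrete, here is a minimal Python sketch. It assumes the usual histogram estimator (bin count divided by sample size times bin width); the function name `histogram_density`, the simulated normal sample, and the bin ranges are all illustrative choices, not part of the original analysis.

```python
import numpy as np

def histogram_density(data, x, n_bins, lo, hi):
    """Histogram density estimate at points x, using n_bins equal bins on [lo, hi]."""
    data = np.asarray(data, dtype=float)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    width = (hi - lo) / n_bins
    # Count the observations that fall in each bin.
    counts, _ = np.histogram(data, bins=n_bins, range=(lo, hi))
    # Index of the bin that contains each evaluation point.
    idx = np.floor((x - lo) / width).astype(int)
    inside = (idx >= 0) & (idx < n_bins)
    est = np.zeros_like(x)
    # Density in a bin = (share of observations in the bin) / (bin width).
    est[inside] = counts[idx[inside]] / (data.size * width)
    return est

rng = np.random.default_rng(0)      # fixed seed, illustrative data only
sample = rng.normal(size=200)

# Same data and same bin width, but the bin boundaries are shifted by 0.3.
est_a = histogram_density(sample, 0.05, n_bins=10, lo=-4.0, hi=4.0)
est_b = histogram_density(sample, 0.05, n_bins=10, lo=-4.3, hi=3.7)
print(est_a, est_b)
```

The two printed values estimate the same density at the same point; any difference between them comes purely from where the bin edges happen to fall.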

Kernel Density Estimation

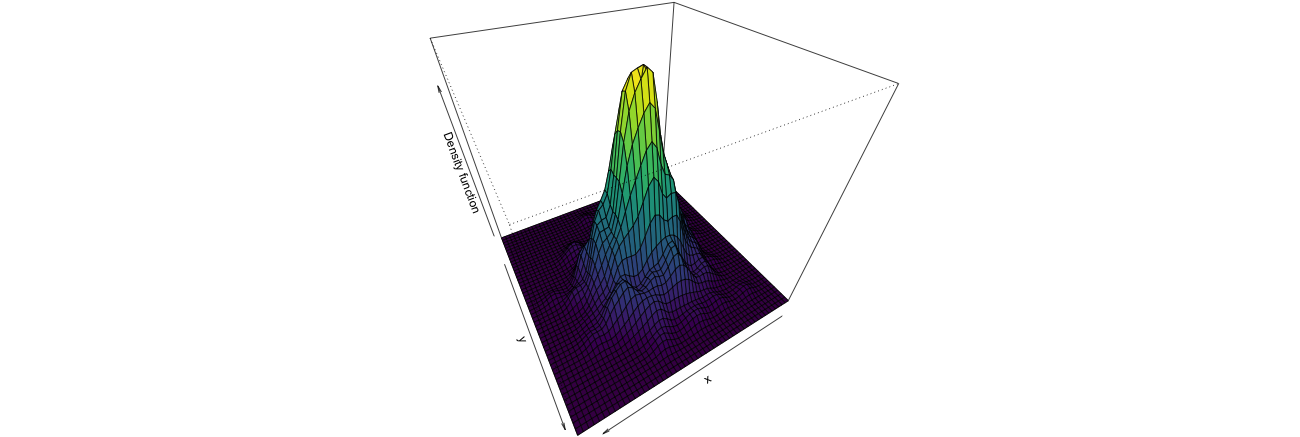

A kernel density estimate, $\hat{f}(x)$, looks at some point $x$ and the window $[x - h, x + h]$, where $h$ is the chosen bandwidth, and counts the observations in the window.

We rewrite the window condition in the initial equation as a “distance” of each surrounding $X_i$ from our point of interest $x$, scaled by the bandwidth: $\frac{x - X_i}{h}$. Thus, instead of fixed bins, we have a moving window centered on the point of interest. However, the roughness remains because every point in the window is weighted equally. You can think of a kernel function as a weighting function. Now, instead of weighting all distances from $x$ the same, we can apply a smooth kernel function such as a Gaussian (normal) function, which weights smaller distances more and larger distances less towards the density at $x$.

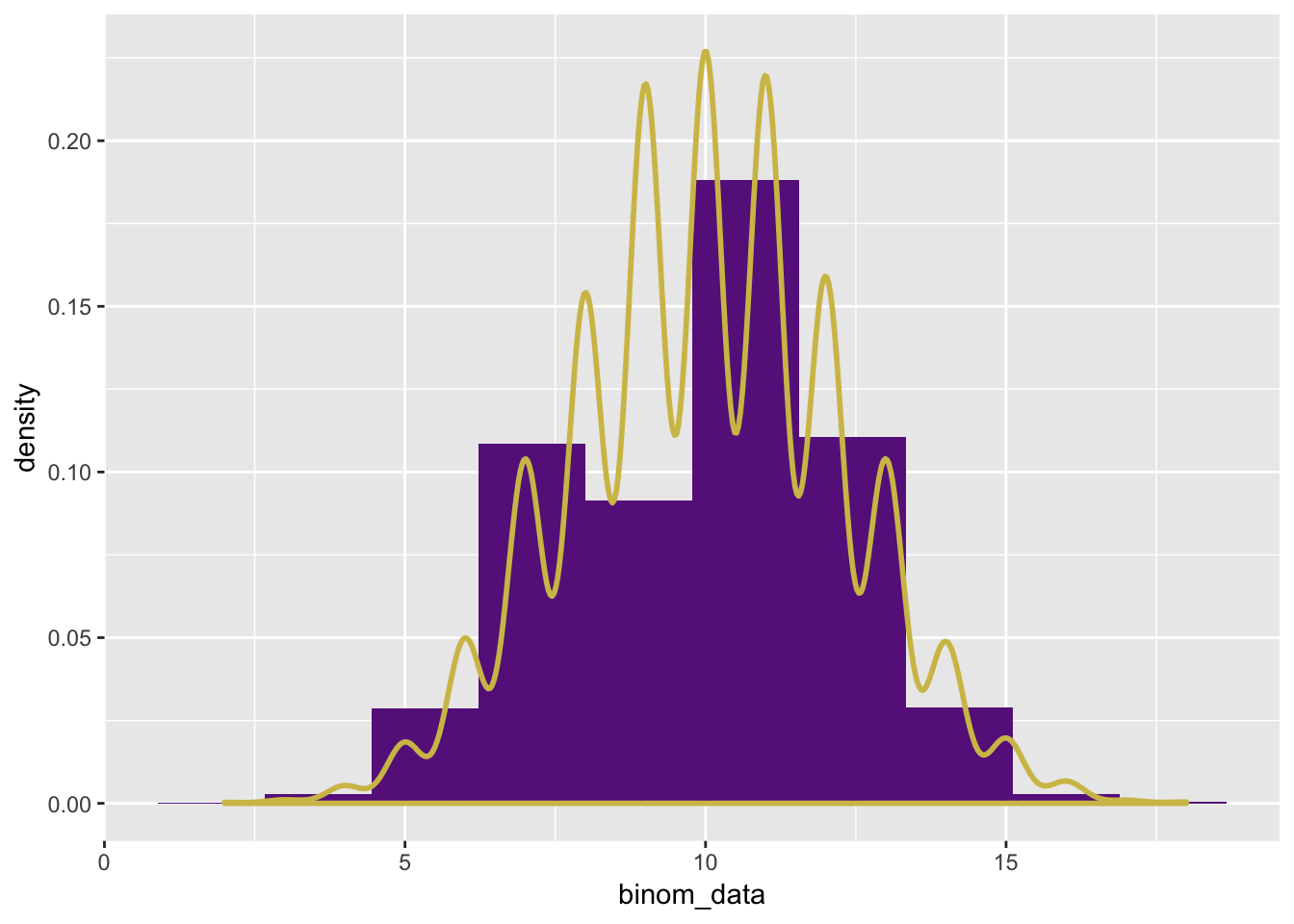

Above, $K$ is our kernel/weighting function. The shape of the Gaussian kernel function is very apparent when the bandwidth is low, because each observation shows up as its own small bump. We look at a sample from a binomial distribution.
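The estimator can be sketched in a few lines of Python. This assumes the standard form $\hat{f}(x) = \frac{1}{nh}\sum_i K\left(\frac{x - X_i}{h}\right)$; the function names, the binomial parameters (`n=20`, `p=0.5`), the sample size, and the bandwidth `h=0.2` are hypothetical choices made only for illustration.

```python
import numpy as np

def gaussian_kernel(u):
    # Smooth weights: small scaled distances count more than large ones.
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

def boxcar_kernel(u):
    # Equal weight for every observation inside the window |u| <= 1.
    return 0.5 * (np.abs(u) <= 1.0)

def kde(data, x, h, kernel=gaussian_kernel):
    """Kernel density estimate at points x with bandwidth h."""
    data = np.asarray(data, dtype=float)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    # Scaled distances (x - X_i) / h for every (point, observation) pair.
    u = (x[:, None] - data[None, :]) / h
    return kernel(u).sum(axis=1) / (data.size * h)

rng = np.random.default_rng(1)
sample = rng.binomial(n=20, p=0.5, size=300)   # hypothetical parameters
grid = np.linspace(-5.0, 25.0, 3001)

smooth = kde(sample, grid, h=0.2)                        # Gaussian weights
rough = kde(sample, grid, h=0.2, kernel=boxcar_kernel)   # equal weights
```

With a bandwidth this small relative to the integer spacing of the data, `smooth` is a row of separate Gaussian bumps and `rough` is a row of flat boxes, which is the contrast the paragraph above describes.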


4. Theory and Properties

To allow for our analysis of KDE properties, we will outline a few rules about the Kernel and underlying density function.

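- Kernel function $K$: $K$ is symmetric about 0, $K(u) \ge 0$, and $\int K(u)\,du = 1$

- $\int u K(u)\,du = 0$ and $\sigma_K^2 = \int u^2 K(u)\,du < \infty$

- PDF $f$: $f$ is Lipschitz continuous, $|f(x) - f(y)| \le L\,|x - y|$ for some constant $L$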

Applications of assumptions will be notated in brackets (e.g., [1]). The proof is adapted from Wasserman (2006) and simplified.

Bias

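As with most estimators, we want to account for bias. For $X_1, \dots, X_n \sim f$, the expected value of the kernel density estimate at $x$:

$$E[\hat{f}(x)] = E\left[\frac{1}{nh}\sum_{i=1}^{n} K\left(\frac{x - X_i}{h}\right)\right] = \frac{1}{h}\int K\left(\frac{x - y}{h}\right) f(y)\,dy$$

We set $u = \frac{y - x}{h}$, substitute (using the symmetry of $K$ [1]), and apply a second-order Taylor series expansion for $f(x + hu)$ about $x$:

$$E[\hat{f}(x)] = \int K(u)\, f(x + hu)\,du, \qquad f(x + hu) = f(x) + hu\,f'(x) + \frac{h^2 u^2}{2} f''(x) + o(h^2)$$

$o(h^2)$ is some function that, as $h \to 0$, is negligible compared to $h^2$. Plugging in our Taylor approximation for $f(x + hu)$:

$$E[\hat{f}(x)] = f(x)\int K(u)\,du + h f'(x)\int u K(u)\,du + \frac{h^2}{2} f''(x)\int u^2 K(u)\,du + o(h^2) = f(x) + \frac{h^2 \sigma_K^2}{2} f''(x) + o(h^2)$$

where $\int K(u)\,du = 1$ [1], $\int u K(u)\,du = 0$ [2], and $\sigma_K^2 = \int u^2 K(u)\,du$ [2]. The bias is therefore

$$\mathrm{Bias}[\hat{f}(x)] = E[\hat{f}(x)] - f(x) = \frac{h^2 \sigma_K^2}{2} f''(x) + o(h^2)$$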

From this we can see that the smaller the bandwidth we choose, the less bias we get. We also get the insight that bias is highest at points where the curvature is very high, such as at a sharp peak. This is pretty apparent when we think of how KDE tries to smooth around these rough edges in the data.
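Both observations can be checked numerically in one special case. The sketch below assumes standard normal data and a Gaussian kernel, for which $E[\hat{f}(x)]$ is exactly the $N(0, 1 + h^2)$ density (a convolution of two normals), and compares the exact bias with the usual leading-order approximation $\frac{h^2}{2}\sigma_K^2 f''(x)$, where $\sigma_K^2 = 1$ for this kernel. The function names are illustrative.

```python
import numpy as np

def normal_pdf(x, var=1.0):
    return np.exp(-0.5 * x**2 / var) / np.sqrt(2.0 * np.pi * var)

def exact_bias(x, h):
    # Assumption: data ~ N(0, 1) and a Gaussian kernel, so the expected
    # estimate at x is the N(0, 1 + h^2) density.
    return normal_pdf(x, 1.0 + h**2) - normal_pdf(x)

def approx_bias(x, h):
    # (h^2 / 2) * sigma_K^2 * f''(x), with sigma_K^2 = 1 and
    # f''(x) = (x^2 - 1) * f(x) for the standard normal density.
    return 0.5 * h**2 * (x**2 - 1.0) * normal_pdf(x)

for h in (0.4, 0.2, 0.1):
    # x = 0 is the peak of the density, where the curvature is largest.
    print(h, exact_bias(0.0, h), approx_bias(0.0, h))
```

The bias at the peak shrinks roughly like $h^2$ as the bandwidth decreases, while at $x = 1$, an inflection point where $f''(x) = 0$, the leading term vanishes and the exact bias is far smaller.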

Variance

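Similarly, we find the upper bound for the variance of the estimated density, $\hat{f}(x)$, at some point $x$:

$$\mathrm{Var}[\hat{f}(x)] = \frac{1}{nh^2}\mathrm{Var}\left[K\left(\frac{x - X_1}{h}\right)\right] \le \frac{1}{nh^2} E\left[K^2\left(\frac{x - X_1}{h}\right)\right] = \frac{1}{nh^2}\int K^2\left(\frac{x - y}{h}\right) f(y)\,dy$$

We now substitute $u = \frac{y - x}{h}$ and approximate $f(x + hu)$ via a first-order Taylor series expansion about $x$:

$$\mathrm{Var}[\hat{f}(x)] \le \frac{1}{nh}\int K^2(u)\, f(x + hu)\,du = \frac{f(x)}{nh}\int K^2(u)\,du + o\left(\frac{1}{nh}\right)$$

Because $\frac{1}{nh}$ is the other function of $h$ in this expression, we say that as $h \to 0$ and $nh \to \infty$, $o\left(\frac{1}{nh}\right)$ is some function that is negligible compared to $\frac{1}{nh}$. We observe that the variance of our kernel density estimate is high at points of high density in the true distribution, $f(x)$. We also see that increasing either sample size or bandwidth decreases this upper bound.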
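A small simulation can illustrate this behaviour. The sketch below assumes standard normal data, a Gaussian kernel, and the usual leading term of the bound, $\frac{f(x)\int K^2(u)\,du}{nh}$, with $\int K^2(u)\,du = \frac{1}{2\sqrt{\pi}}$ for the Gaussian kernel. The sample size, bandwidth, and number of replications are arbitrary illustrative values.

```python
import numpy as np

def kde_at(data, x, h):
    # Gaussian-kernel density estimate at one point x, computed
    # separately for each row (each simulated sample) of data.
    u = (x - data) / h
    return (np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)).mean(axis=-1) / h

rng = np.random.default_rng(2)
n, h, x, reps = 500, 0.3, 0.0, 2000
samples = rng.normal(size=(reps, n))   # reps independent samples of size n
estimates = kde_at(samples, x, h)
empirical_var = estimates.var(ddof=1)  # spread of the estimate across samples

f_x = 1.0 / np.sqrt(2.0 * np.pi)             # true N(0, 1) density at x = 0
roughness_K = 1.0 / (2.0 * np.sqrt(np.pi))   # integral of K(u)^2, Gaussian K
leading_bound = f_x * roughness_K / (n * h)
print(empirical_var, leading_bound)
```

Rerunning with a larger `n` or a larger `h` should shrink both numbers, in line with the $\frac{1}{nh}$ rate.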

Bringing it Together: MSE

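Knowing both the bias and variance of KDE predictors, it’s natural to look towards computing the Mean Squared Error (MSE):

$$\mathrm{MSE}[\hat{f}(x)] = \mathrm{Bias}[\hat{f}(x)]^2 + \mathrm{Var}[\hat{f}(x)]$$

The Mean Squared Error can be treated as a risk function similar to what we saw in Bayesian predictors. $\mathrm{AMSE}$ is the asymptotic mean squared error, that is, the MSE with the negligible $o(\cdot)$ terms dropped. With this it’s quite straightforward to optimize with respect to $h$.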
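As a worked sketch of that optimization, assume the leading-order squared bias is $\frac{h^4\sigma_K^4}{4}\left(f''(x)\right)^2$ and the leading-order variance is $\frac{f(x)R(K)}{nh}$, where $\sigma_K^2 = \int u^2 K(u)\,du$ and $R(K) = \int K^2(u)\,du$ is shorthand introduced here:

$$\mathrm{AMSE}(h) = \frac{h^4 \sigma_K^4}{4}\left(f''(x)\right)^2 + \frac{f(x)\,R(K)}{nh}$$

$$\frac{d}{dh}\mathrm{AMSE}(h) = h^3 \sigma_K^4 \left(f''(x)\right)^2 - \frac{f(x)\,R(K)}{n h^2} = 0 \quad\Longrightarrow\quad h^*(x) = \left(\frac{f(x)\,R(K)}{n\,\sigma_K^4 \left(f''(x)\right)^2}\right)^{1/5}$$

Under these assumptions the pointwise optimal bandwidth shrinks at the rate $n^{-1/5}$, and plugging $h^*(x)$ back in gives an AMSE of order $n^{-4/5}$.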

5. Choosing Bandwidth

Bandwidth is similar to bin width in histograms: it determines how smooth the KDE curve is. If the chosen bandwidth is too small, the curve will have high variance; this case is called undersmoothing. If the chosen bandwidth is too large, however, the curve will have high bias and we are oversmoothing the curve.

Because the quality of the estimate depends so heavily on the bandwidth, bandwidth selection is a very important topic. With a well-chosen bandwidth, the estimate of the underlying distribution neither wiggles too much (as it does with a very small bandwidth) nor loses its characteristic features (as it does with a very large bandwidth).
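One rough way to see undersmoothing and oversmoothing is to count the local maxima of the estimated curve at different bandwidths. The sketch below assumes a Gaussian kernel and a hypothetical bimodal sample (an equal mixture of $N(-2, 1)$ and $N(2, 1)$); the helper names and bandwidth values are illustrative.

```python
import numpy as np

def kde_gaussian(data, grid, h):
    # Gaussian-kernel density estimate evaluated on a grid of points.
    u = (grid[:, None] - data[None, :]) / h
    return np.exp(-0.5 * u**2).sum(axis=1) / (data.size * h * np.sqrt(2.0 * np.pi))

def count_modes(density):
    # Interior grid points that are strictly higher than both neighbours.
    mid = density[1:-1]
    return int(np.sum((mid > density[:-2]) & (mid > density[2:])))

rng = np.random.default_rng(3)
# Hypothetical bimodal sample: equal mixture of N(-2, 1) and N(2, 1).
sample = np.concatenate([rng.normal(-2.0, 1.0, 100), rng.normal(2.0, 1.0, 100)])
grid = np.linspace(-7.0, 7.0, 2801)

modes = {h: count_modes(kde_gaussian(sample, grid, h)) for h in (0.05, 0.6, 5.0)}
print(modes)
```

A very small bandwidth produces many spurious bumps (undersmoothing), while a very large one merges the two true modes into a single hump (oversmoothing).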

Although there are many different bandwidth selection methods, the main idea of these methods is to minimize the asymptotic mean integrated squared error (AMISE). You might’ve thought that we’ve already found the optimal $h$, but previously we only found the $h$ for a single point $x$. To do this for the entire distribution, we must optimize the same AMSE, integrated over all values of $x$. We provide the tools below and leave this as a simple exercise for the reader:

$$\mathrm{AMISE}(h) = \int \mathrm{AMSE}(h; x)\,dx = \frac{h^4 \sigma_K^4}{4}\int \left(f''(x)\right)^2 dx + \frac{\int K^2(u)\,du}{nh}$$

where $\sigma_K^2 = \int u^2 K(u)\,du$ and we have used $\int f(x)\,dx = 1$.

In the end, you should find that the optimal bandwidth $h^*$ depends on the overall curvature of the underlying distribution $f$. Despite the age of the KDE concept, many of the advances in KDE have come within the last decade in the field of bandwidth selection. If you find a good way to estimate $h^*$ or the overall curvature, $\int \left(f''(x)\right)^2 dx$, prepare to get published. See Wang and Zambom (2019) and Goldenshluger and Lepski (2011).
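As one simple illustration (not one of the cited methods), the sketch below assumes the standard AMISE minimizer $h^* = \left(\frac{\int K^2}{n\,\sigma_K^4 \int (f'')^2}\right)^{1/5}$ and replaces the unknown curvature with that of a normal reference density. With a Gaussian kernel this reproduces the familiar rule of thumb of roughly $1.06\,\sigma\,n^{-1/5}$. All function names are illustrative, and the integrals are approximated by Riemann sums.

```python
import numpy as np

def amise_optimal_h(f_second, kernel, grid, n):
    """h* = (R(K) / (n * sigma_K^4 * R(f'')))^(1/5), integrals by Riemann sums."""
    dx = grid[1] - grid[0]
    k = kernel(grid)
    roughness_k = np.sum(k**2) * dx             # integral of K(u)^2
    sigma2_k = np.sum(grid**2 * k) * dx         # integral of u^2 K(u)
    curvature = np.sum(f_second(grid)**2) * dx  # integral of f''(x)^2
    return (roughness_k / (n * sigma2_k**2 * curvature)) ** 0.2

def gaussian(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

def normal_second_derivative(x, sigma=1.0):
    # Second derivative of the N(0, sigma^2) density (the assumed reference f).
    z = x / sigma
    return (z**2 - 1.0) * gaussian(z) / sigma**3

grid = np.linspace(-10.0, 10.0, 20001)
n = 400
h_star = amise_optimal_h(normal_second_derivative, gaussian, grid, n)
print(h_star, 1.06 * n ** -0.2)
```

The normal reference is only a convenient stand-in: if the true density is multimodal or heavy-tailed, its curvature can differ a lot from the normal's, which is why the more careful estimators in the literature exist.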

References

Goldenshluger, Alexander, and Oleg Lepski. 2011. “Bandwidth Selection in Kernel Density Estimation: Oracle Inequalities and Adaptive Minimax Optimality.” The Annals of Statistics 39 (3). Institute of Mathematical Statistics: 1608–32.

Parzen, Emanuel. 1962. “On Estimation of a Probability Density Function and Mode.” The Annals of Mathematical Statistics 33 (3). JSTOR: 1065–76.

Rosenblatt, Murray. 1956. “Remarks on Some Nonparametric Estimates of a Density Function.” The Annals of Mathematical Statistics 27 (3). JSTOR: 832–37.

Wang, Qing, and Adriano Z Zambom. 2019. “Subsampling-Extrapolation Bandwidth Selection in Bivariate Kernel Density Estimation.” Journal of Statistical Computation and Simulation. Taylor & Francis, 1–20.

Wasserman, Larry. 2006. All of Nonparametric Statistics. Springer Science & Business Media.